But HPC and The Cloud Can Rule On.

High-Performance Computing, while immensely powerful, faces several challenges as it continues to evolve and respond to exponential demands for computational power.

With the end of Moore’s Law looming ever closer, Owen Thomas, founder of Red Oak Consulting, argues that scientific computation will continue to thrive regardless.

Moore’s Law, formulated by Gordon Moore in 1965 and revised by him in 1975, predicted that the number of transistors on an integrated circuit would double roughly every two years, leading to an exponential increase in computing power.

This Law has had profound implications for the development of HPC and the evolution of cloud computing, shaping the landscape of modern technology.

The ‘death’ of Moore’s Law has been a hot topic in the HPC community for a long time.

Since its formulation there has been about a one trillion-fold increase in the amount of computing power being used in predictive models, such as weather forecasting, to take just one example.

To improve these high-performance models further, however, we need exponentially more computing power. Without it, the gains in accuracy will diminish.
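To put these figures in perspective, the arithmetic of doubling can be sketched in a few lines of Python. This is an illustration only: the function names are made up for this example, and the two-year doubling period is simply the figure quoted above, not a measured value.

```python
import math


def growth_factor(years, doubling_period_years=2.0):
    """Growth multiple after `years` if capacity doubles every period."""
    return 2.0 ** (years / doubling_period_years)


def doublings_for(factor):
    """Number of doublings needed to reach a given growth multiple."""
    return math.log2(factor)


# Ten years of doubling every two years is five doublings.
print(growth_factor(10))              # 32.0

# A one trillion-fold increase corresponds to roughly 40 doublings.
print(round(doublings_for(1e12), 1))  # 39.9
```

Each further doubling demands as much new capacity as everything that came before it, which is why sustaining this curve becomes harder over time.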

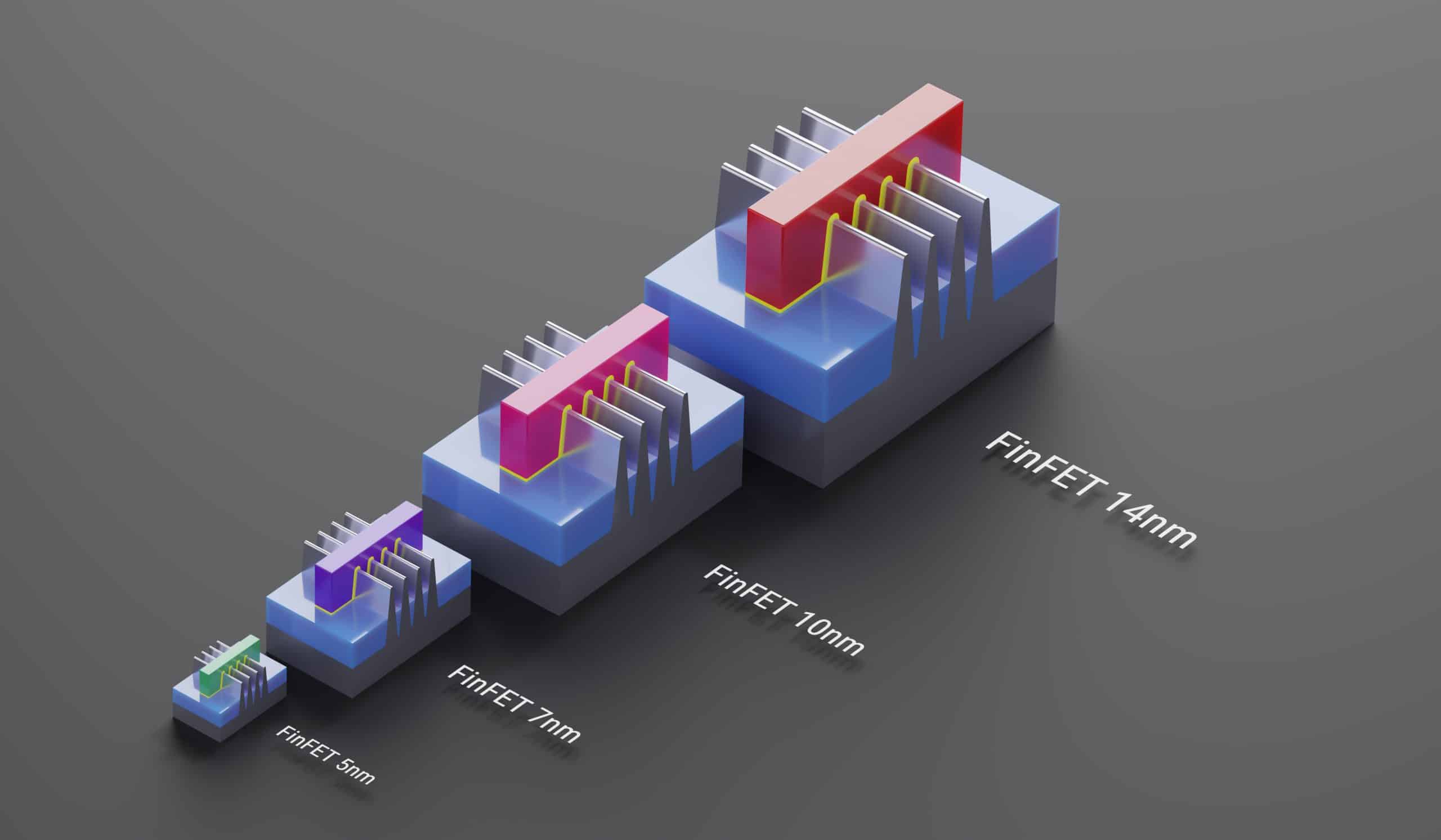

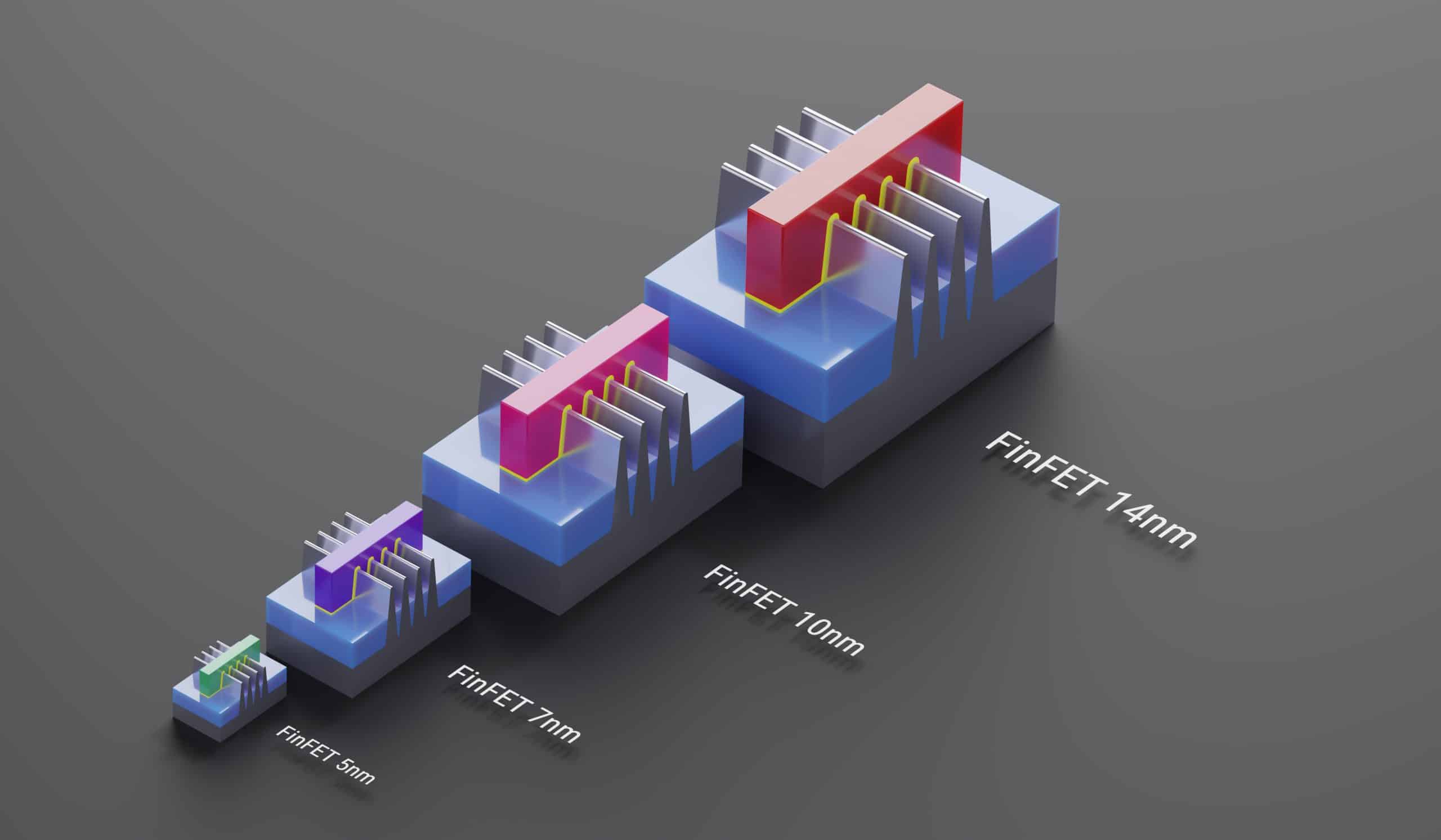

Whilst much has been made of rising costs alongside shrinking space available for the growing number of semiconductor chips involved in HPC compute, we could be forgiven for thinking that we are close to reaching the limits in available computational power.

But that’s not necessarily the case. Indeed, the Cloud will continue to be the principal catalyst for realising HPC’s impact upon research and higher education, so long as we all work better with the tools we have to improve efficiencies and outcomes.

Much of that will be down to training, and much also down to funding, but crucially, it’s about understanding where the true power lies, where petabytes of data are processed in milliseconds.

Currently, we are in the multicore chip phase, and we’re starting to get computing power generated in a different way.

In a recent paper examining ways to improve performance over time in a post-Moore’s Law world, the authors landed on three main categories: software-level improvements; algorithmic improvements; and new hardware architectures.

This third category is experiencing the biggest moment right now, with GPUs and field-programmable gate arrays (FPGAs) entering the HPC market and ever more advanced and bespoke chips emerging, all of which will help researchers and wider HPC users as Moore’s Law passes the baton on.

Scalability Has Been Key

The scalability and cost-effectiveness driven by Moore’s Law have significantly influenced the development of cloud computing.

The ability to pack more transistors onto a chip has led to more powerful and affordable hardware, making it feasible for cloud service providers to offer robust computing resources at a lower cost. Cloud computing, in turn, leverages the principles of virtualisation and on-demand resource allocation.

The technologies and innovation sitting behind Moore’s Law have empowered cloud providers to continually enhance their infrastructure, providing users with the ability to scale up or down as needed.

Furthermore, the rapid evolution of semiconductor technology has spurred innovation in cloud services.

Cloud providers can leverage the latest hardware advancements to offer new and improved services to their users.

This continuous cycle of innovation enhances the agility of cloud platforms, allowing them to adapt to changing technological landscapes.

While the growth of HPC and the Cloud aligns with Moore’s predictions, it faces challenges such as physical limitations and the diminishing returns of miniaturisation.

As transistors approach atomic scales, alternative technologies such as quantum computing may become necessary for sustaining the pace of progress.

Getting The Maximum Out of HPC in The Cloud

It is now more important than ever to expand capabilities with what is available and look to get the maximum out of HPC through avenues such as HPC in the Cloud.

Workload optimisation will need to deliver greater efficiency if universities and research institutions are to get the most out of the power they have at their fingertips.

Often, we witness HPC environments that aren’t working to full capacity, whether that be down to a knowledge gap from within, or just down to funding cycles dictating when and where peaks and troughs in workloads occur.

This, I suggest, is one of the gaps that needs to be closed, and it’s one that we ourselves strive to close with our customers.
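One simple way to make that gap visible is to compare the core-hours a cluster actually delivered with the core-hours it could have delivered. The sketch below is illustrative only: the `utilisation` function, the 1,000-core cluster and the monthly figures are all hypothetical, chosen to show the kind of peaks and troughs described above.

```python
def utilisation(used_core_hours, cores, hours):
    """Fraction of the available core-hours that were actually consumed."""
    available = cores * hours
    if available <= 0:
        raise ValueError("cluster capacity must be positive")
    return used_core_hours / available


# Hypothetical usage for a 1,000-core cluster over three 30-day months.
monthly_used = {"Jan": 648_000, "Feb": 216_000, "Mar": 360_000}

for month, used in monthly_used.items():
    share = utilisation(used, cores=1_000, hours=720)
    print(f"{month}: {share:.0%} of capacity used")
```

Tracking a figure like this over a funding cycle gives a first indication of whether spare capacity is structural or merely seasonal.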

Meanwhile, there are wider factors to consider, such as cost (long or short term), who maintains and manages the infrastructure (if it’s on premises), and the skills and time available to oversee demands.

None of this should stop cloud-based HPC bringing considerable power and potential to these situations.

Over time needs will evolve, as will the nature of the support that a researcher or in-house enterprise IT team requires.

What is critical, however, is that as universities and research departments evolve, any transitions to a robust managed service arrangement need to be seamless so as not to hinder their wider work, or indeed impact the very results HPC was intended for.

Final Thoughts

The history of HPC is one of shifts between different ways of generating computational speed.

The shift to exploiting multicore architectures is the most recent example, but this too is coming to an end.

A U.S. National Academies study concludes that business-as-usual computing would not be adequate for the post-exascale – that’s 18 noughts – era.

Technical computing has now shifted to AI and hyperscalers – the ability to scale to demand.

Despite everything, there are numerous ways in which Moore’s Law is still guiding industries to look at new ways of enhancing computational power.

Mills, for example, shows that micro chip advances and macro architectural innovations such as the Cloud have combined to keep Moore’s Law going strong.

The reality is that fewer vendors are likely to accept the financial and technical risks of a paradigm that relies on ever-larger machines powered by untested hardware, and the HPC community is shifting its perspective.

Cloud service providers now offer a variety of HPC clusters of varying size, performance, and price. The key is to ensure that optimal impact is unlocked through robust support, training and understanding.

OWEN THOMAS

SENIOR PARTNER AND CO-FOUNDER
