Roco’s Recap June 2025

June 2025 Roco’s Recap In case you missed it: Life’s only getting faster, and staying current with major breakthroughs in


Why is Benchmarking Essential for HPC Success?

Our consultants translate benchmarking results into actionable insights, guiding you towards the optimal HPC solution that maximises performance and ROI.

We delve into your workflows, analysing their behaviour and resource utilisation throughout their lifecycle.

We conduct rigorous benchmarks to assess factors like cache efficiency, memory usage, and core latency, providing a holistic picture of your system's performance.

When exploring cloud migration, we recommend cost-effective SKUs and strategies like reserved instances or spot instances to optimise your cloud expenditure.
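Choosing a cost-effective SKU usually means comparing the cost to complete a job rather than the hourly price: a faster, pricier VM can still be cheaper per job. The sketch below is a minimal illustration, assuming you already have benchmarked runtimes for each candidate. The SKU labels and prices are invented placeholders, not real Azure pricing, and the spot figure ignores the eviction and restart overhead that spot capacity can incur.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str                   # hypothetical SKU / pricing-model label
    price_per_node_hour: float  # hypothetical hourly price per node
    nodes: int                  # nodes used for the job
    runtime_hours: float        # wall-clock time measured by benchmarking on this SKU


def cost_per_job(opt: Option) -> float:
    # Cost to solution = nodes x wall-clock hours x hourly node price
    return opt.nodes * opt.runtime_hours * opt.price_per_node_hour


def cheapest(options: list[Option]) -> Option:
    # Pick the option with the lowest cost per completed job
    return min(options, key=cost_per_job)


if __name__ == "__main__":
    options = [
        Option("sku-a-on-demand", 3.00, 4, 2.0),
        Option("sku-b-on-demand", 4.50, 2, 2.5),  # pricier per hour, cheaper per job
        Option("sku-a-spot", 0.90, 4, 2.0),
    ]
    for o in options:
        print(f"{o.name}: {cost_per_job(o):.2f}")
    print("cheapest:", cheapest(options).name)
```

With these made-up numbers the pricier `sku-b` beats `sku-a` on demand (22.50 vs 24.00 per job), which is why benchmark runtimes, not list prices alone, should drive the choice.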

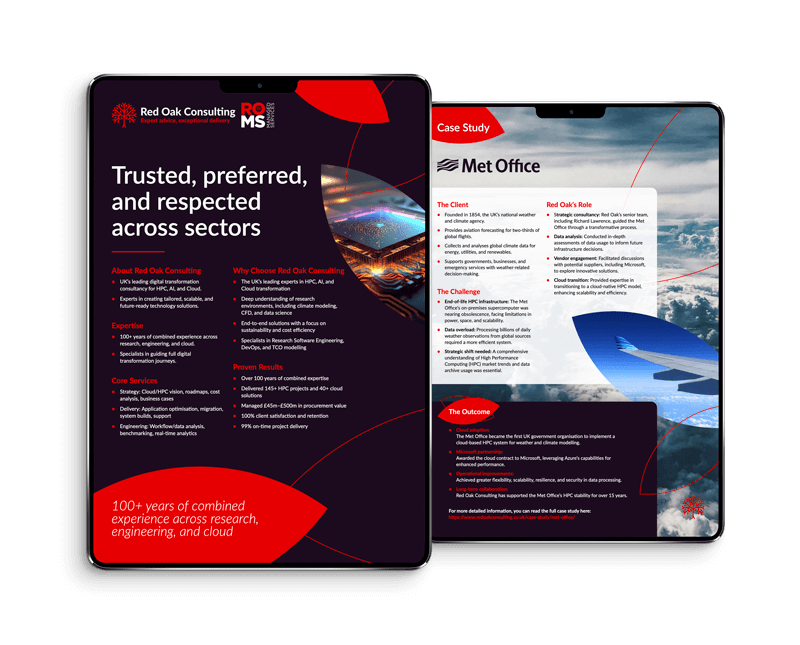

“Red Oak’s expert knowledge of the Microsoft Azure ecosystem, as well as their expertise in HPC and their specific choice of VMs, enabled us to successfully transition all our on-premises HPC workflows to the cloud. The use of CycleCloud to implement our batch-file-dependent workflows was particularly valuable.”

At Red Oak Consulting, we are committed to maximising performance while optimising costs, every step of the way. Contact us today and unlock the full potential of your HPC infrastructure.

High-Performance Computing projects delivered

HPC projects delivered on time

HPC procurements ranging from £100K to £500 million

Proven track record of customer satisfaction

HPC in the Cloud projects

Most obviously, benchmarking lets you understand how an application or workflow performs on a particular system, including the per-application characteristics that hit or create system bottlenecks.

Having a performance baseline for a range of applications allows you to compare performance or capability between different systems (including prospective replacements evaluated as part of a procurement), or simply helps you identify misconfigured installations.
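One common way to summarise a comparison across a range of applications is to compute each application's speedup on the candidate system relative to the baseline, then take the geometric mean, which is the usual choice for averaging ratios like these. The sketch below assumes you have one representative runtime per application on each system; the application names and timings are hypothetical.

```python
import math


def speedups(baseline: dict[str, float], candidate: dict[str, float]) -> dict[str, float]:
    # Speedup > 1 means the candidate finished faster than the baseline system.
    # Only applications measured on both systems are compared.
    return {app: baseline[app] / candidate[app] for app in baseline if app in candidate}


def geometric_mean(values) -> float:
    values = list(values)
    if not values:
        raise ValueError("no values to average")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical wall-clock runtimes in seconds
baseline = {"cfd": 1200.0, "md": 800.0, "weather": 3000.0}
candidate = {"cfd": 600.0, "md": 1000.0, "weather": 1500.0}

if __name__ == "__main__":
    per_app = speedups(baseline, candidate)
    for app, s in per_app.items():
        print(f"{app}: {s:.2f}x")
    print(f"geometric mean: {geometric_mean(per_app.values()):.3f}x")
```

The single summary figure hides detail, so it is worth reading the per-application ratios too: here `md` runs slower on the candidate (0.8x) despite an overall gain, which is exactly the kind of outlier that can point to a misconfigured installation.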

Having a performance baseline for a range of applications also makes it easier to identify unexpected performance regressions after security patches, firmware or operating system updates, and the associated changes in software dependencies (such as libraries and runtimes).

Benchmarking HPC applications lets you track performance improvements or regressions over time, which can help you prioritise Research Software Engineering (RSE) effort in the areas that will provide the most benefit.
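A regression check against a stored baseline can be as simple as comparing median runtimes before and after a change. This is a minimal sketch, assuming several repeated runs per application (medians damp run-to-run noise); the 5% tolerance is an arbitrary placeholder and should really be chosen from the variability you observe on your own system.

```python
import statistics


def check_regressions(baseline: dict[str, list[float]],
                      current: dict[str, list[float]],
                      tolerance: float = 0.05) -> dict[str, float]:
    """Return {app: fractional slowdown} for apps whose median runtime grew
    by more than `tolerance` (0.05 = 5%) relative to the baseline."""
    flagged = {}
    for app, base_runs in baseline.items():
        if app not in current:
            continue  # app not re-run after the update; nothing to compare
        base = statistics.median(base_runs)
        now = statistics.median(current[app])
        change = (now - base) / base
        if change > tolerance:
            flagged[app] = change
    return flagged


if __name__ == "__main__":
    # Hypothetical runtimes (seconds) before and after, say, a firmware update
    before = {"cfd": [100.0, 102.0, 98.0], "md": [50.0, 51.0, 49.0]}
    after = {"cfd": [110.0, 112.0, 111.0], "md": [50.5, 49.5, 50.0]}
    for app, change in check_regressions(before, after).items():
        print(f"{app}: {change:+.1%} slower than baseline")
```

Storing each run's results alongside the date and software stack turns the same comparison into a history, which is what makes trends over time visible.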

Functional testing ensures that the answer is correct (an important first step once an application has been optimised), while performance benchmarking measures the time taken to produce a correct answer (which you should still check) under the different configurations being tested, e.g. the number of cores or the parallelisation strategy, such as the balance of MPI ranks and threads.
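The check-then-time pattern can be sketched as a small harness: run each configuration, verify its answer against a reference within a tolerance, and only trust the timing if the answer is correct. Here `toy_workload` is a hypothetical stand-in for a real application; it splits a sum into chunks to mimic a domain decomposition but does not actually run in parallel, so the timings illustrate the structure, not parallel speedup.

```python
import math
import time


def toy_workload(n: int, chunks: int) -> float:
    """Hypothetical stand-in for an application: sum 1/k^2 for k = 1..n,
    split into `chunks` partial sums, loosely mimicking a decomposition."""
    bounds = [n * i // chunks for i in range(chunks + 1)]
    partials = [
        sum(1.0 / (k * k) for k in range(lo + 1, hi + 1))
        for lo, hi in zip(bounds, bounds[1:])
    ]
    return sum(partials)


def benchmark(workload, n: int, chunk_options, rel_tol: float = 1e-9):
    # Functional reference: the unsplit (serial) configuration.
    reference = workload(n, 1)
    results = []
    for chunks in chunk_options:
        start = time.perf_counter()
        value = workload(n, chunks)
        elapsed = time.perf_counter() - start
        # Splitting changes the floating-point summation order, so compare
        # within a tolerance rather than expecting bit-identical answers.
        correct = math.isclose(value, reference, rel_tol=rel_tol)
        results.append({"chunks": chunks, "seconds": elapsed, "correct": correct})
    return results


if __name__ == "__main__":
    for r in benchmark(toy_workload, n=100_000, chunk_options=[1, 4, 16]):
        status = "ok" if r["correct"] else "WRONG ANSWER"
        print(f"chunks={r['chunks']:>3}  {r['seconds']:.4f}s  {status}")
```

The tolerance matters: changing the decomposition or process count legitimately reorders floating-point operations, so a bitwise comparison would report false failures.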

Benchmarking in HPC really looks at system-level performance, with the emphasis on producing a balanced system (compute, memory, fabric and storage) that runs well under a variety of workloads and conditions.

For a variety of reasons, High Performance Linpack (HPL) is one of the most common benchmarks, but it is perhaps the least suited to simulating a system's normal operation. The best benchmarks are usually the applications that consume the most cycles on your current system, since improvements in their runtime will likely increase productivity and allow improvements to the scientific fidelity of results.
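Finding the applications that consume the most cycles can start from job accounting data. The sketch below totals core-hours per application and ranks them; the record format is an assumption (in practice you would export something similar from your scheduler's accounting, such as Slurm's `sacct`), and the job records are made up.

```python
from collections import defaultdict


def core_hours_by_app(jobs: list[dict]) -> list[tuple[str, float]]:
    # Core-hours = cores allocated x elapsed seconds / 3600, summed per app,
    # then sorted so the biggest consumers come first.
    totals: dict[str, float] = defaultdict(float)
    for job in jobs:
        totals[job["app"]] += job["cores"] * job["elapsed_s"] / 3600.0
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical accounting records (field names are assumptions)
jobs = [
    {"app": "cfd", "cores": 256, "elapsed_s": 7200},
    {"app": "md", "cores": 64, "elapsed_s": 18000},
    {"app": "cfd", "cores": 128, "elapsed_s": 3600},
    {"app": "postproc", "cores": 4, "elapsed_s": 1800},
]

if __name__ == "__main__":
    ranking = core_hours_by_app(jobs)
    for app, hours in ranking:
        print(f"{app}: {hours:.1f} core-hours")
    shortlist = [app for app, _ in ranking[:2]]  # candidate benchmark set
    print("benchmark candidates:", shortlist)
```

Ranking by core-hours rather than job count keeps a few large, long-running applications from being overlooked in favour of many small ones.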


36 Pure Offices

Cheltenham Office Park

Hatherley Lane

Cheltenham GL51 6SH

United Kingdom

Telephone: +44 (0)1242 806 188

General Enquiries

info@redoakconsulting.co.uk

Sales Enquiries

sales@redoakconsulting.co.uk

© Copyright Red Oak Consulting 2024 | Privacy Policy | Modern Slavery Policy
